Systematic Experiment Management #1. Improving AI Model Performance

Hello, we are Seokgi Kim and Jongha Jang, AI engineers at MakinaRocks! At MakinaRocks, we are revolutionizing industrial sites by identifying problems that can be solved with AI, defining these problems, and training AI models to address them. Throughout numerous AI projects, we have recognized the critical importance of experiment management. This insight was particularly highlighted while working on an AI project for component prediction with an energy company. Our experiences led us to develop and integrate a robust experiment management function into our AI platform, Runway. In this post, we’ll share how we systematized our experiment management approach and our learnings from various AI projects.

Why is Experiment Management Essential?

The core of any AI project is the performance of the models we develop, and improving that performance requires a structured approach to conducting and comparing experiments. During model development, individual team members often test different methods, so these experiments must be conducted under consistent conditions for the performance comparison between methodologies to be accurate.

For instance, if a model developed using Methodology A with extensive data outperforms a model using Methodology B with less data, we can't conclusively say that Methodology A is superior without considering the data volume differences. Effective experiment management helps us control such variables and make more valid comparisons.

Moreover, training data inevitably changes as a team collaborates and works with clients, which complicates the comparison of models from different experiments. This risk of confusion exists not only between different team members but also within your own experiments. For example, suppose you ran an experiment a month ago and the client now requests a last-minute change to the training data. Without detailed records of the previous data and how it was processed, or if reproducing the old data is too difficult, comparing the performance of new experiments with old ones becomes nearly impossible.

An experiment management system is indispensable for ensuring the success of AI projects. Here are key reasons why it’s necessary:

- To deliver the best-performing model to clients, it’s crucial to compare the performance of multiple models accurately.

- The optimal model may vary depending on how the scenario or test set is constructed.

- When a high-performing model is developed, it should be possible to reproduce its training and performance evaluation.

- Different team members should achieve the same results when running experiments under the same conditions.

To address these needs, we established a set of principles for experiment management throughout the project and devised a systematic approach to manage them efficiently. Here’s how we created our experiment management system.

Organizing Experiment Management

To efficiently manage experiments in AI model development projects, we have organized our experiment management into three main areas:

- Data Version Management
  - Managing data versions allows you to compare model performance by ensuring that only comparable experiments are evaluated together. This consistency is crucial for accurate performance assessments.
- Source Code Version Management
  - By versioning the source code, it becomes easy to identify which methodology was used for each experiment through the corresponding code. This practice greatly enhances reproducibility, as you can simply revert to the specific version of the code to rerun an experiment.
- MLflow Logging Management
  - We utilized MLflow to log the results of our experiments. This tool helps us organize experiments in a way that facilitates comparison, increasing the likelihood of uncovering insights to improve model performance.

With this structured approach to data versioning, comparing the performance of two experiments is straightforward when their data versions differ only in the last number, for example, v0.X.Y and v0.X.Z. Because such versions share an identical test set, any difference in performance can be attributed to changes in the model or methodology rather than to variations in the data.
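To make the comparability rule concrete, here is a minimal Python sketch. It assumes, as the v0.X.Y / v0.X.Z example suggests, that data versions are written as `vMAJOR.MINOR.PATCH` and that versions sharing the first two numbers use the same test set. The function names are hypothetical.

```python
import re

# Assumed format: "vMAJOR.MINOR.PATCH"; versions sharing MAJOR.MINOR share a test set.
_VERSION_RE = re.compile(r"^v(\d+)\.(\d+)\.(\d+)$")


def parse_data_version(version: str) -> tuple[int, int, int]:
    """Split a data version such as 'v0.4.1' into its integer parts."""
    match = _VERSION_RE.match(version)
    if match is None:
        raise ValueError(f"Not a data version: {version!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def shares_test_set(a: str, b: str) -> bool:
    """True if two data versions differ only in the last number (assumed same test set)."""
    return parse_data_version(a)[:2] == parse_data_version(b)[:2]


print(shares_test_set("v0.4.0", "v0.4.2"))
print(shares_test_set("v0.4.0", "v0.5.0"))
```

A check like this could run before ranking or plotting runs, so that experiments evaluated on different test sets are never placed side by side.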

Managing Source Code Versions in Model Development

In a model development project, maintaining a record of the different attempts to improve model performance is crucial. With continuous trial and error, the direction of the project often changes. Consequently, the source code is in a constant state of flux. Without proper source code management, reproducing experiments can become challenging, and team members might find themselves rewriting code when they attempt to replicate methodologies.

To address these challenges, we focused on maintaining a one-to-one correspondence between experiments and source code versions. We managed our source code using Git Tags, and here’s how we did it:

💡 Experiment Process for Source Code Version Control

1. Pull Request Merge

When a team member wants to try a new experiment, they write a pull request (PR) with the necessary code. The rest of the team reviews the PR, and upon approval, it is merged into the main branch.

2. Git Tag Release

After the branch is merged, we create a new release with a Git tag and a brief release note. The note summarizes the experiments that were updated or the features that were added since the previous version, so it is easy to see what changed.

3. Logging Git Tags When Running Experiments

When running an experiment, it’s important to log the Git tag in MLflow. This practice makes it easy to track which source code version each experiment was run on. In our project, we log the source code version of each experiment as an MLflow tag named src_version in the MLflow run.

By following this process, each source code version is systematically generated and released as a Git tag.
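Step 3 could look roughly like the following Python sketch. The `src_version` tag name is the one used in our project; the `vMAJOR.MINOR.PATCH` format check and the helper names are illustrative assumptions. `mlflow.set_experiment` and `mlflow.start_run(tags=...)` are standard MLflow calls, and MLflow is imported inside the function so the tag helper can be used and tested on its own.

```python
import re

SRC_VERSION_TAG = "src_version"  # MLflow tag name holding the source code version
_TAG_RE = re.compile(r"^v\d+\.\d+\.\d+$")  # assumed release tag format, e.g. v0.5.1


def build_run_tags(src_version: str) -> dict[str, str]:
    """Return the MLflow tags for a run, rejecting values that don't look like a release tag."""
    if not _TAG_RE.match(src_version):
        raise ValueError(f"src_version must look like 'v0.5.1', got {src_version!r}")
    return {SRC_VERSION_TAG: src_version}


def start_tagged_run(experiment_name: str, src_version: str):
    """Start an MLflow run in `experiment_name` with the source code version already tagged."""
    import mlflow  # imported lazily so build_run_tags works without MLflow installed

    mlflow.set_experiment(experiment_name)  # creates the Experiment if it does not exist
    return mlflow.start_run(tags=build_run_tags(src_version))
```

Inside `with start_tagged_run("TAME-Data-v0.4.0", "v0.5.1"):`, anything logged with `mlflow.log_param` or `mlflow.log_metric` lands in a run that already carries its source code version.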

Source code version v0.5.1 release note

On the MLflow screen, the source code version is logged as an MLflow Tag. This allows users to check the “Columns” section to quickly see which source code version each experiment was run on.

Part of the TAME-Data-v0.4.0 Experiment display

When using MLflow to manage experiments, the Git Commit ID is automatically logged with each experiment. However, there are distinct advantages to using Git Tags to specify and log source code versions.

💡 Benefits of Using Git Tags for Source Code Versioning in AI Projects

1. Easily Identify Source Code Versions

When viewing multiple experiments in the Experiments list, Git Tags allow you to see at a glance which experiments were run with the same source code version.

2. Understand the Temporal Order of Experiments

With Git commit IDs, determining the order of experiments is difficult because commit hashes carry no ordering information. You can work out the order by inspecting the commit history, but that is not straightforward. Git Tags, on the other hand, provide version numbers that make the sequence of experiments easy to understand. This is particularly advantageous during the model development phase, where the temporal order of experiments is crucial.

3. Document Source Code Versions with Release Notes

Using Git Tags comes with the natural benefit of release notes, which document each source code version. As experiments accumulate, having detailed release notes helps in tracking methodologies and understanding the progression of the project. This documentation is invaluable for both code management and project traceability, offering insights into the project timeline and the context of each version.
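One caveat on ordering: tags only sort in release order when their numbers are compared numerically, because plain string sorting places `v0.10.0` before `v0.5.1`. A small Python sketch, using made-up tag names:

```python
def version_key(tag: str) -> tuple[int, ...]:
    """Turn 'v0.10.2' into (0, 10, 2) so tags sort numerically, not alphabetically."""
    return tuple(int(part) for part in tag.removeprefix("v").split("."))


tags = ["v0.10.0", "v0.5.1", "v0.9.3"]
print(sorted(tags))                   # ['v0.10.0', 'v0.5.1', 'v0.9.3']  (string order)
print(sorted(tags, key=version_key))  # ['v0.5.1', 'v0.9.3', 'v0.10.0']  (release order)
```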

Managing MLflow Logging

As mentioned earlier, we use an open-source tool called MLflow to manage our experiments, which is especially important in an AI project that can accumulate anywhere from dozens to tens of thousands of experiments. Here's how we use MLflow to manage them.

The key to recording experiments in MLflow is ensuring comprehensive and clear descriptions. Below is a detailed example.

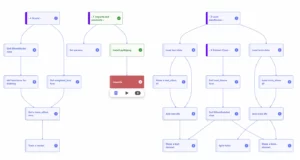

First, we created a separate MLflow Experiment for each data version to make performance comparisons easier. The Description section of each of these Experiments gives a brief description of its data version.

Below is an example MLflow Experiment screen.

Example MLflow screen for Data Version v0.4.0

In the Description section of the MLflow screen for Data Version v0.4.0, the following details are included:

- Experiment Overview
  - A brief summary highlighting the unique aspects of this data version.
  - A link to the relevant Notion page, explaining why this data version was defined and the purpose of the experiment.
- Data Overview
  - Information about the number of data samples.
  - Details of the Train/Test/Val split for that data version.
- Compatible Source Code Versions
  - A list of compatible source code versions. As features are added or removed throughout the project, this list ensures reproducibility by providing a quick reference to the appropriate source code versions.
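As a sketch of how such a description could be generated rather than typed by hand, the Python below assembles the three sections into Markdown and creates one Experiment per data version. The function names and the `TAME-Data-<version>` naming (modeled on the TAME-Data-v0.4.0 Experiment) are hypothetical. Storing the text in the `mlflow.note.content` tag, which the MLflow UI uses for an Experiment's description, is an assumption worth checking against your MLflow version.

```python
def build_description(summary: str, notion_url: str, n_samples: int,
                      split: dict[str, int], compatible_src: list[str]) -> str:
    """Assemble the Experiment Overview, Data Overview, and compatible versions as Markdown."""
    split_text = " / ".join(f"{name}: {count}" for name, count in split.items())
    return "\n".join([
        "## Experiment Overview",
        summary,
        f"Background: {notion_url}",
        "## Data Overview",
        f"Samples: {n_samples}",
        f"Split: {split_text}",
        "## Compatible Source Code Versions",
        ", ".join(compatible_src),
    ])


def create_data_version_experiment(data_version: str, description: str) -> str:
    """Create one MLflow Experiment for a data version and return its ID."""
    from mlflow.tracking import MlflowClient  # lazy import; build_description needs no MLflow

    # Assumption: the UI shows the "mlflow.note.content" tag as the Experiment description.
    return MlflowClient().create_experiment(
        f"TAME-Data-{data_version}", tags={"mlflow.note.content": description}
    )
```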

Does writing detailed descriptions alone make experiment management perfect? Not quite. As the model evolves and multiple data versions emerge, finding the right experiment can become time-consuming. To address this, we record experiments in separate spaces based on their purpose, which makes specific experiments easier to locate later.

To organize experiments according to their purpose, the following spaces were created:

Separated experimental spaces based on the purpose of the experiment

[Type 1] Project Progress Leaderboard

This space records experiments to show the overall progress of the project. As the data version changes, experiments that perform well or are significant for each version are recorded here, providing a comprehensive overview of the project’s development.

[Type 2] Data Version Experiment Space

This is the main experiment space where all experiments are recorded according to the data version. With a focus on comparing model performance across experiments, an experiment space is created for each data version. Detailed information about the data used to train the model, model parameter values, and other relevant details are recorded to ensure the reproducibility of experiments.

[Type 3] Experiment Space for Test Logging

The test logging space serves as a notepad-like environment. It is used to verify whether experiment recording works as intended and is reproducible during source code development. This space allows for temporary, recognizable names (like the branch name being worked on). Once test logging confirms that an experiment is effective, the details are logged back into the main experiment space, the “[Type 2] Data Version Experiment Space.”

[Type 4] Hyperparameter Tuning Experiment Space

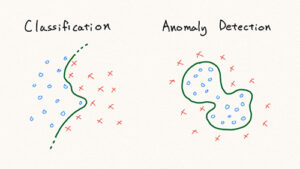

All hyperparameter tuning experiments for the main experiments are logged in this separate space. This segregation ensures that the main experiment space remains focused on comparing the performance of different methodologies or models. If hyperparameter tuning experiments were logged in the main space, it would complicate performance comparisons. Once a good hyperparameter combination is found, it is applied and logged in the main experiment space. Logging hyperparameter exploration helps in analyzing trends, which can narrow down the search space and increase the likelihood of finding better-performing hyperparameters. For example, here's a graph that analyzes the trend of depth, one of the hyperparameters in the CatBoost model.

Trend analysis graph of the depth hyperparameter in CatBoost models

When we set the search space of depth to 2–12, the resulting trend analysis graph revealed a positive correlation between the depth and the validation set performance, as indicated by the Pearson correlation coefficient (r). This analysis suggests that higher values of depth tend to yield better performance. Therefore, a refined strategy would be to narrow the search space to values between 8 and 11 for further tuning.
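The same kind of trend analysis can be reproduced outside the MLflow UI. The Python sketch below computes Pearson's r between a hyperparameter and the validation score, then proposes a narrower range from the best-scoring trials. The trial values are made up for illustration, and taking the min/max of the top-k trials is a simple heuristic assumed here; the range in the text was chosen by reading the graph.

```python
from math import sqrt
from statistics import fmean


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def narrowed_range(trials: list[tuple[int, float]], top_k: int) -> tuple[int, int]:
    """Min and max hyperparameter value among the top_k trials by validation score."""
    best = sorted(trials, key=lambda t: t[1], reverse=True)[:top_k]
    values = [value for value, _ in best]
    return min(values), max(values)


# Made-up (depth, validation score) pairs, for illustration only.
trials = [(2, 0.61), (4, 0.66), (6, 0.70), (8, 0.73),
          (9, 0.75), (10, 0.76), (11, 0.75), (12, 0.74)]
depths = [d for d, _ in trials]
scores = [s for _, s in trials]
print(round(pearson_r(depths, scores), 2))
print(narrowed_range(trials, top_k=4))
```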

Separating the experimental spaces based on the purpose of the experiment not only makes it easier to track and find specific experiments but also, in the case of hyperparameter tuning, helps in uncovering valuable insights that can significantly improve model performance.
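A small routing helper can keep runs out of the wrong space. The Python sketch below maps each of the four purposes to an MLflow Experiment name; every name it produces is a hypothetical convention, not necessarily the one used in the project.

```python
from enum import Enum


class Space(Enum):
    """Experiment spaces separated by purpose."""
    LEADERBOARD = 1
    DATA_VERSION = 2
    TEST_LOGGING = 3
    HPARAM_TUNING = 4


def experiment_name(space: Space, data_version: str = "", branch: str = "") -> str:
    """Return the (hypothetical) MLflow Experiment name for a run with the given purpose."""
    if space is Space.LEADERBOARD:
        return "Project-Progress-Leaderboard"
    if space is Space.TEST_LOGGING:
        if not branch:
            raise ValueError("test logging runs need a branch name")
        return f"Test-{branch}"  # temporary, recognizable name based on the working branch
    if not data_version:
        raise ValueError(f"{space.name} runs need a data version")
    if space is Space.DATA_VERSION:
        return f"TAME-Data-{data_version}"
    return f"HPO-TAME-Data-{data_version}"  # tuning runs kept out of the main space
```

Passing the result to `mlflow.set_experiment` before a run starts keeps tuning trials and throwaway tests out of the main data version space.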

Assessing Our Experiment Management

In our AI project, organizing experiment management became a crucial task. Here's what we learned about structuring our experiment management and how it has helped us advance the project.

What Worked Well: Seamless Collaboration and Efficient Comparison of Experiment Methodologies

With an organized experiment management system, collaboration across the team became more seamless. For instance, a teammate might say, “I tried an experiment with Method A, and here are the results,” and share a link to the MLflow experiment. Clicking the link reveals a detailed description of Method A, the data version used, and the source code version. Another team member can then read the description, check out that source code version, modify the code, and run a similar experiment with slight variations. Without this system, re-implementing Method A would be inefficient and could introduce inconsistencies, making it difficult to compare the performance of newly developed models.

Beyond facilitating collaboration, this approach also helps us identify directions to improve model performance. By comparing the average performance between methodologies, we can focus on comparable experiments rather than sifting through a disorganized collection of experiments.
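As a sketch of the averaging step, the Python below groups runs by methodology and averages a score, considering only runs on a single data version so that the comparison stays valid. The `method`, `data_version`, and `score` keys are hypothetical; with real runs, the rows could come from `mlflow.search_runs`, whose DataFrame exposes tags and metrics as `tags.<name>` and `metrics.<name>` columns.

```python
from collections import defaultdict
from statistics import fmean


def mean_by_method(runs: list[dict], data_version: str) -> dict[str, float]:
    """Average score per methodology over runs that share one data version."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for run in runs:
        if run["data_version"] != data_version:
            continue  # runs on other data versions use a different test set
        grouped[run["method"]].append(run["score"])
    return {method: fmean(scores) for method, scores in grouped.items()}
```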

What To Improve: Inefficiencies and Human Error

Beyond organizing experiments, we required a source code version for every experiment, so the model development cycle typically followed this pattern: conceive a methodology → implement the methodology in code → submit a pull request → review the pull request → run the experiment. This cycle sometimes introduced inefficiencies, especially when we wanted to quickly try and discard various methodologies. Setting clear criteria for quick trials and defining exceptions to the process can help avoid unnecessary steps and streamline experimentation.

Additionally, we logged the source code version by adding a src_version key to the config file and recording its value as an MLflow Tag. However, updating the config file by hand during experiments led to human errors. Automating this process as much as possible is essential to minimize errors and improve efficiency.
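One way to automate this is to read the version from Git when a run starts instead of from the config file. The Python sketch below is an assumption, not our implementation: it returns the tag pointing exactly at `HEAD` (via `git describe --tags --exact-match`) and returns `None` when the working tree has changes or `HEAD` is untagged, so the caller can send such runs to the test logging space. The `git` callable is injectable so the logic can be tested without a repository.

```python
import subprocess
from typing import Callable, Optional


def _git(*args: str) -> str:
    """Run a git command and return its stripped stdout; raises CalledProcessError on failure."""
    result = subprocess.run(["git", *args], capture_output=True, text=True, check=True)
    return result.stdout.strip()


def resolve_src_version(git: Callable[..., str] = _git) -> Optional[str]:
    """Return the release tag of HEAD, or None if HEAD is untagged or the tree has changes."""
    try:
        if git("status", "--porcelain"):
            return None  # modified or untracked files: the tag would not describe the running code
        return git("describe", "--tags", "--exact-match")
    except (subprocess.CalledProcessError, FileNotFoundError):
        return None  # HEAD is not a tagged release, or git is unavailable
```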

Closing Thoughts

In this post, we’ve discussed the importance of experiment management and how creating an effective experiment management system helped us successfully complete our project. While the core principles of AI projects remain consistent, each project may have slightly different objectives, so it’s beneficial to customize your experiment management system to suit your specific needs. In the next post, we’ll share more about how MakinaRocks’ experiment management expertise is applied to our AI platform, Runway, to eliminate repetitive tasks and increase the efficiency of experiments.