Enhancing Predictive Maintenance with Machine Learning

You can’t talk about the industrial sector without talking about industrial machinery. Neither can we. At MakinaRocks, we aim to make industrial technology intelligent and deliver it as transformative solutions.

We believe in tailoring our AI solutions to our clients’ needs. Those needs include not only better technical specifications, such as efficiency and performance metrics, but also something just as important: convenience.

There are a number of ways to maintain industrial machinery. If you search around, you will most likely come across two terms: preventive maintenance and predictive maintenance.

While both refer to preventing industrial machinery breakdown (and factory shutdowns – yikes!), preventive maintenance generally involves scheduling an expert to come down to your factory once every few months to take a look at your machinery and see if anything needs fixing. On the other hand, predictive maintenance refers to using data to predict when the machine will break down, so you can call in an expert prior to the anticipated breakdown. MakinaRocks solutions take the concept of predictive maintenance a few steps further.

What we’ve built is an AI-based predictive maintenance solution that excels in accuracy and performance.

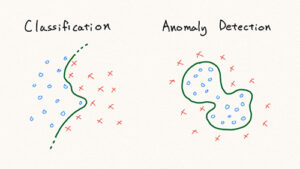

In this post, we will explain the novel anomaly detection metric behind our state-of-the-art anomaly detection solution: Reconstruction along Projection Pathway (RaPP), which was published at the International Conference on Learning Representations (ICLR) 2020.

What is RaPP?

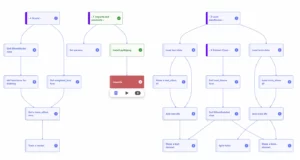

RaPP is our proposed anomaly detection metric for autoencoders. It substantially improves anomaly detection performance without changing anything about the training process.

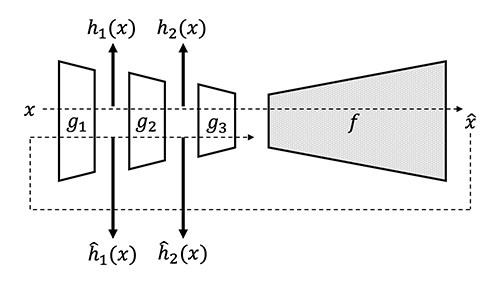

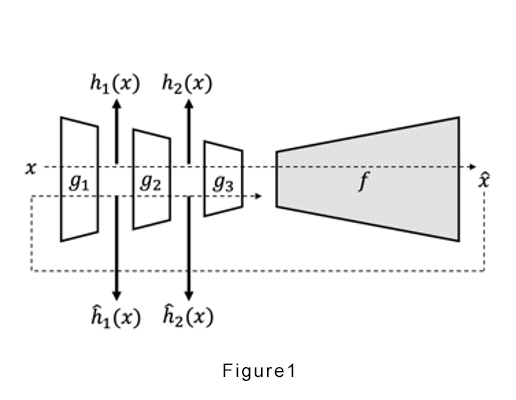

RaPP redefines the anomaly detection metric by extending the notion of reconstruction. An autoencoder’s reconstruction error is the difference between its input and its output. RaPP extends this comparison into the autoencoder’s hidden layers: the initial output (the reconstruction) is fed through the very same autoencoder’s encoder again, and the intermediate activation vectors it produces in each hidden layer are compared with those produced by the original input. These per-layer differences, which we call hidden reconstruction errors, are then aggregated.
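The pathway described above can be sketched in a few lines of NumPy. This is a minimal illustration, not MakinaRocks’ implementation: the layer sizes, the tanh activations, and the helper names (`make_layers`, `forward`, `hidden_reconstruction_errors`) are hypothetical, and the weights are random rather than trained, so only the shapes and the data flow are meaningful.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_layers(sizes):
    """Random (untrained) dense layers: hypothetical stand-ins for a trained autoencoder."""
    return [(rng.normal(scale=1 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

# Hypothetical 8 -> 6 -> 4 -> 2 encoder and mirrored 2 -> 4 -> 6 -> 8 decoder.
ENCODER = make_layers([8, 6, 4, 2])
DECODER = make_layers([2, 4, 6, 8])

def forward(layers, x):
    """Run x through dense layers (tanh on all but the last) and return every activation."""
    activations = []
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1:
            x = np.tanh(x)
        activations.append(x)
    return activations

def hidden_reconstruction_errors(x):
    """Per-layer differences between the input's and the reconstruction's encoder activations."""
    x_hat = forward(DECODER, forward(ENCODER, x)[-1])[-1]  # ordinary reconstruction
    h = [x] + forward(ENCODER, x)              # layer 0 is the input itself
    h_hat = [x_hat] + forward(ENCODER, x_hat)  # same encoder, fed the reconstruction
    return [a - b for a, b in zip(h, h_hat)]

x = rng.normal(size=(5, 8))  # a batch of 5 samples with 8 features
errors = hidden_reconstruction_errors(x)
print([e.shape for e in errors])  # [(5, 8), (5, 6), (5, 4), (5, 2)]
```

The first entry is the ordinary reconstruction error; the remaining entries are the extra signal RaPP collects along the encoder, at no additional training cost.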

SAP & NAP

To reiterate, RaPP compares the hidden-layer activations produced by an input with those produced by its reconstruction. We propose two ways of turning these hidden reconstruction errors into an anomaly score: RaPP’s Simple Aggregation along Pathway (SAP) and RaPP’s Normalized Aggregation along Pathway (NAP).

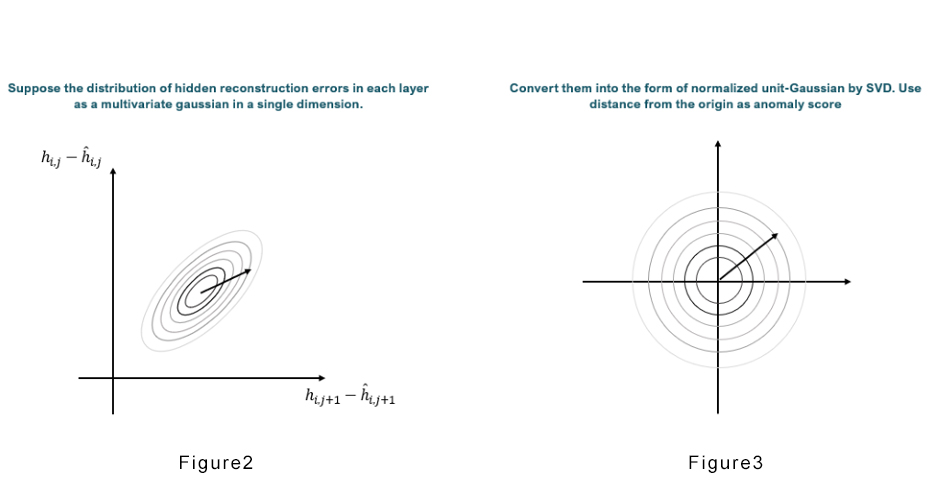

Figure 2 illustrates SAP. SAP simply sums the squared hidden reconstruction errors across layers, so it measures how far the error vector lies from the origin. When the errors are correlated or spread unevenly across directions, as in the distribution shown, that plain distance can be misleading. A more suitable approach takes the shape of the distribution into account, as illustrated in Figure 3, which amounts to computing a Mahalanobis distance. To do this, we apply Singular Value Decomposition (SVD) to the hidden reconstruction errors computed on the training set and use the result to normalize the error of each new sample. This normalized metric is RaPP’s NAP.
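For readers who prefer notation, the two scores can be summarized as follows. This is our shorthand reading of the paper’s definitions, not a verbatim copy: h_i(x) is the activation of the i-th encoder layer (with h_0(x) = x), x̂ is the autoencoder’s reconstruction, d(x) concatenates the per-layer hidden reconstruction errors, μ_X is the mean of d(x) over the training set X, and V and Σ come from the SVD of the centered training matrix (keeping only nonzero singular values). Consult the paper for the exact formulation.

```latex
\hat{h}_i(x) = h_i(\hat{x}), \qquad
d(x) = \big[\, h_0(x) - \hat{h}_0(x);\ \dots;\ h_\ell(x) - \hat{h}_\ell(x) \,\big]

s_{\mathrm{SAP}}(x) = \sum_{i=0}^{\ell} \big\lVert h_i(x) - \hat{h}_i(x) \big\rVert_2^2 = \lVert d(x) \rVert_2^2

\bar{D} = U \Sigma V^\top \quad \text{(SVD of the centered training errors)}, \qquad
s_{\mathrm{NAP}}(x) = \big\lVert (d(x) - \mu_X)^\top V \Sigma^{-1} \big\rVert_2^2
```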

Both SAP and NAP collapse the differences measured at multiple layers into a single scalar anomaly score: the higher the score, the more likely the sample is anomalous. Because these scores draw on the hidden layers rather than on the output alone, they enable more accurate anomaly detection. Please refer to our paper for a more in-depth look at our methodology.
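To make the difference between the two scores concrete, here is a minimal NumPy sketch that operates on already-computed hidden reconstruction errors (one concatenated error vector per sample). The names `sap_score` and `NapScorer`, the `eps` cut-off for near-zero singular values, and the synthetic two-dimensional errors are illustrative assumptions, not the implementation used in ADS.

```python
import numpy as np

def sap_score(d):
    """SAP: squared Euclidean norm of each concatenated error vector (distance from origin)."""
    return np.sum(d ** 2, axis=1)

class NapScorer:
    """NAP: normalize error vectors with an SVD fitted on training-set errors."""

    def __init__(self, train_d, eps=1e-8):
        self.mu = train_d.mean(axis=0)
        # Thin SVD of the centered training errors: D_bar = U @ diag(s) @ Vt
        _, s, vt = np.linalg.svd(train_d - self.mu, full_matrices=False)
        keep = s > eps * s.max()  # drop near-zero singular values
        self.v = vt[keep].T       # columns are the principal directions
        self.s = s[keep]

    def score(self, d):
        # Project onto the principal directions, rescale each by 1/sigma, take the squared norm.
        z = (d - self.mu) @ self.v / self.s
        return np.sum(z ** 2, axis=1)

rng = np.random.default_rng(0)
# Hypothetical "normal" errors, strongly correlated across the two coordinates.
train_d = rng.normal(size=(500, 2)) @ np.array([[3.0, 2.9], [0.0, 0.3]])
nap = NapScorer(train_d)

along = np.array([[3.0, 2.9]])    # large, but along the direction normal errors vary in
across = np.array([[1.0, -1.0]])  # small, but against that direction
print(sap_score(along), sap_score(across))  # SAP ranks `along` as more anomalous
print(nap.score(along), nap.score(across))  # NAP ranks `across` as more anomalous
```

SAP sees only magnitude, so the large but typical error looks worse. NAP rescales each principal direction by how much the training errors vary along it, so the small error pointing in an unusual direction stands out, which is the Mahalanobis-style behavior described above.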

Our RaPP results

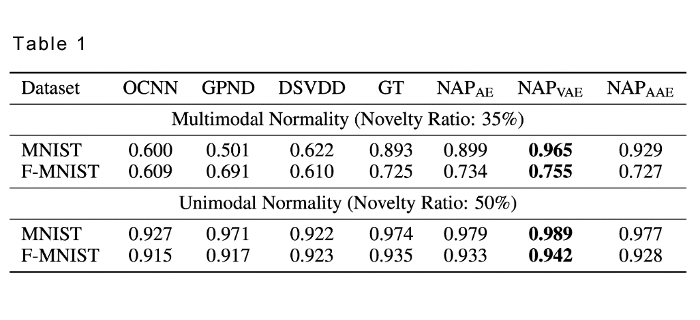

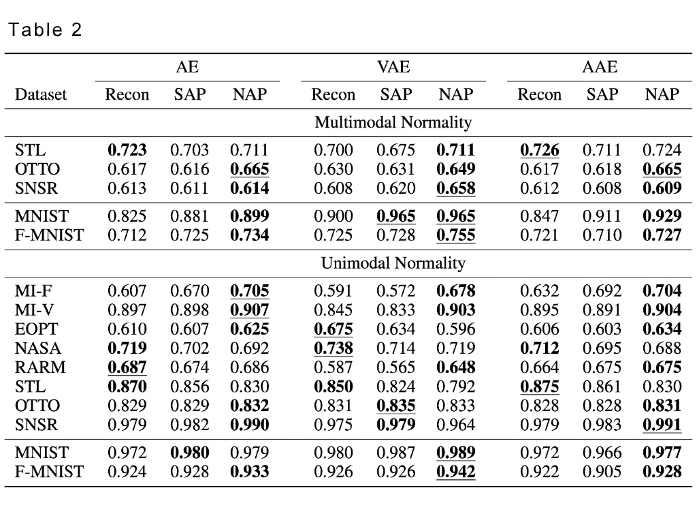

We performed numerous experiments to verify the effectiveness of RaPP. We began with widely used benchmark datasets such as MNIST and Fashion-MNIST (FMNIST) and compared the performance of our model with results reported in published, peer-reviewed papers.

As the results show, applying NAP to different autoencoders outperformed the results reported in those published papers. The strongest results came from applying NAP to a Variational Autoencoder (VAE).

We also demonstrated RaPP’s effectiveness in multimodal normality cases, where the normal data consists of more than one class, as shown in our results.

With RaPP, performance improved on every dataset except STL (steel) in the multimodal normality cases. In the unimodal normality cases, RaPP was more effective in 6 out of 10 instances.

MakinaRocks’s transformative solution for predictive maintenance: ADS

Our RaPP anomaly score metric is featured in our Anomaly Detection Suite (ADS), empowering the solution to predict machinery breakdown with increased accuracy.

For more information about ADS, contact us at contact@makinarocks.ai

Want to know what we do? Visit us at www.makinarocks.ai

To read our RaPP paper, published at ICLR 2020: https://iclr.cc/virtual_2020/poster_HkgeGeBYDB.html

References

[1] Ki Hyun Kim et al., RaPP: Novelty Detection with Reconstruction along Projection Pathway, ICLR, 2020

[2] Lei et al., Geometric Understanding of Deep Learning, arXiv, 2018

[3] Stanislav Pidhorskyi et al., Generative Probabilistic Novelty Detection with Adversarial Autoencoders, NeurIPS, 2018

[4] Kingma et al., Auto-Encoding Variational Bayes, ICLR, 2014

[5] Makhzani et al., Adversarial Autoencoders, arXiv, 2015

[6] Raghavendra Chalapathy et al., Anomaly Detection Using One-Class Neural Networks, arXiv:1802.06360, 2018

[7] Lukas Ruff et al., Deep One-Class Classification, ICML, 2018

[8] Izhak Golan and Ran El-Yaniv, Deep Anomaly Detection Using Geometric Transformations, NeurIPS, 2018

[9] Ki Hyun Kim, Operational AI: Building a Lifelong Learning Anomaly Detection System, DEVIEW, 2019