The need for AI-driven anomaly detection in flight test data

Flight testing is critical for verifying performance and safety during aircraft development, but conducting tests in actual flight introduces significant risk. To mitigate this, integrated flight tests are carried out on the ground using simulators that replicate realistic flight conditions, allowing scenarios to be repeated in a controlled setting. These environments generate rich, high-fidelity data that is well suited to training AI models to distinguish normal from abnormal operation. However, traditional monitoring systems can detect only predefined anomalies. Engineers needed a system that could identify known faults, explain their root causes, and detect previously undefined “unknown” anomalies.

Building an explainable and scalable AI anomaly detection system

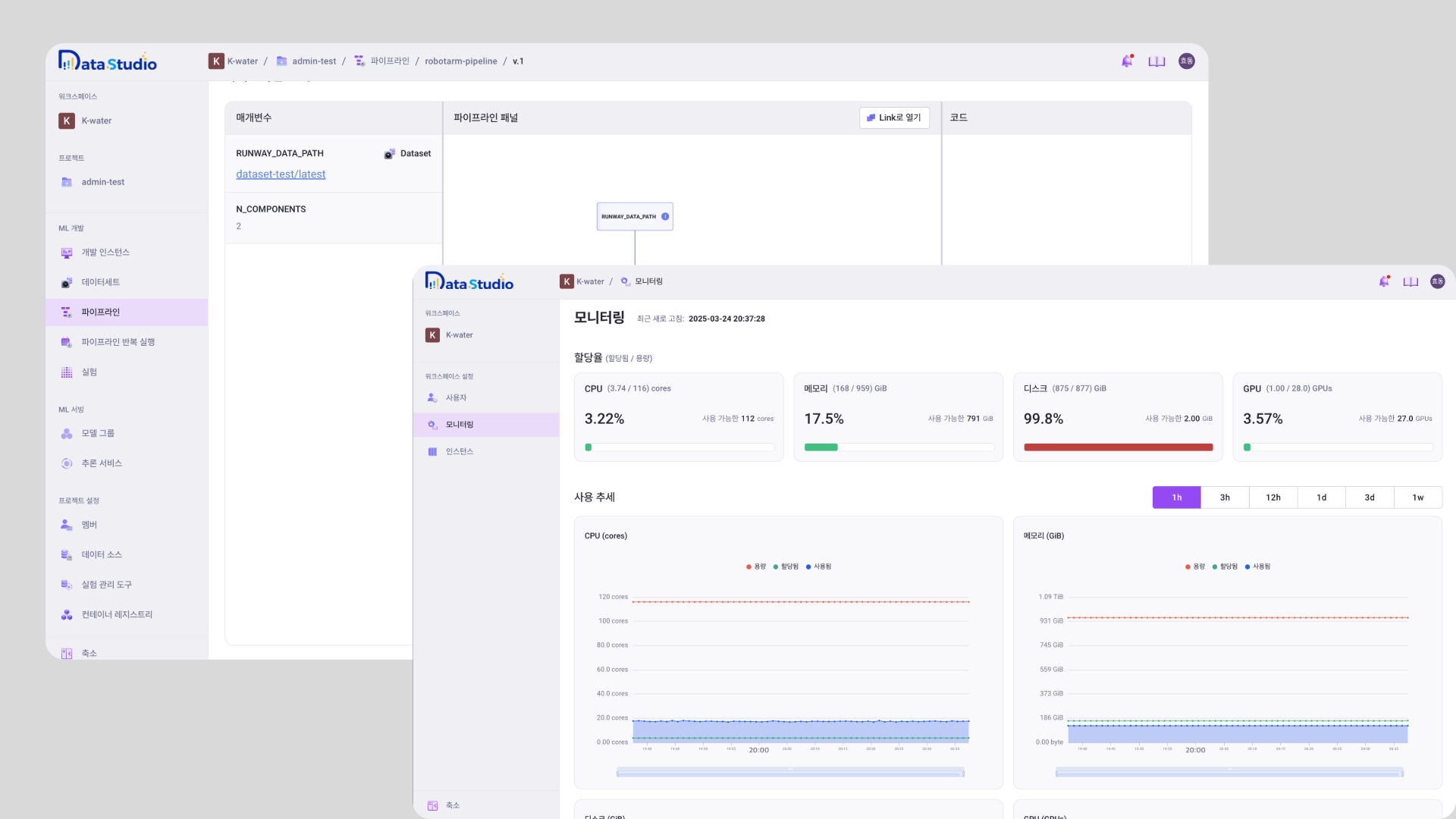

A Transformer-based time-series classification model was developed to classify flight states into four categories: normal, warning, dangerous, and fail. To detect unknown anomalies absent from the training data, the OpenMax technique, an open-set recognition method, was applied. Integrated Gradients provided interpretable visualizations of the key contributing variables, allowing engineers to understand the cause of each detected anomaly. An end-to-end MLOps environment was established on MakinaRocks Runway Lite (AI platform), automating model development, deployment, and monitoring to support continuous improvement and operational reliability.
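To make the attribution step more concrete, the following is a minimal sketch of Integrated Gradients in plain Python. It is not the project's implementation: the Transformer classifier is replaced by a hypothetical logistic score, and the variable names, weights, and input values are invented for illustration. The sketch averages the model's gradient along a straight path from a baseline input to the actual input, then scales each average by how far that variable moved from the baseline.

```python
import math

# Hypothetical stand-in for a trained model: a logistic anomaly score over
# three made-up flight variables. The real system used a Transformer
# classifier; these names and weights are assumptions for illustration.
FEATURES = ["pitch_rate", "airspeed_error", "hydraulic_pressure_drop"]
WEIGHTS = [0.4, 1.5, 2.5]
BIAS = -3.0


def anomaly_score(x):
    """Toy model output: sigmoid of a weighted sum of the inputs."""
    z = sum(w * v for w, v in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def score_gradient(x):
    """Analytic gradient of the toy score with respect to each input."""
    s = anomaly_score(x)
    return [s * (1.0 - s) * w for w in WEIGHTS]


def integrated_gradients(x, baseline, steps=200):
    """Approximate the path integral of gradients with a midpoint Riemann sum."""
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [b + alpha * (v - b) for v, b in zip(x, baseline)]
        for i, g in enumerate(score_gradient(point)):
            avg_grad[i] += g / steps
    # Attribution = (input - baseline) * average gradient along the path.
    return [(v - b) * g for v, b, g in zip(x, baseline, avg_grad)]


sample = [0.5, 1.0, 2.0]      # hypothetical anomalous reading
baseline = [0.0, 0.0, 0.0]    # hypothetical "nominal" reference input
attributions = integrated_gradients(sample, baseline)

# Rank variables by the magnitude of their contribution.
ranked = sorted(zip(FEATURES, attributions), key=lambda p: abs(p[1]), reverse=True)
for name, value in ranked:
    print(f"{name}: {value:+.4f}")
```

A useful sanity check is that the attributions sum approximately to the difference between the score at the input and the score at the baseline. With a real network, the analytic gradient would be replaced by automatic differentiation, and the choice of baseline becomes a modeling decision in its own right.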

Faster response and improved decision-making accuracy

For known anomalies, the model achieved an overall F1-score of 0.9946 (normal: 0.9985, warning: 0.9766, dangerous: 0.9796, fail: 0.9737). Real-time visualization of contributing variables enabled engineers to pinpoint root causes quickly and respond to anomalies with greater speed and confidence. The system also demonstrated the ability to detect previously undefined anomalies, strengthening both the resilience and intelligence of flight testing operations.
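The source does not state how the overall F1-score was aggregated. The unweighted mean of the four per-class scores is about 0.982, so the overall 0.9946 is presumably not a plain macro average; a support-weighted or micro average over data dominated by the normal class would be one possible explanation, though that is an inference. The sketch below shows how per-class, macro, and support-weighted F1 differ, using a small set of invented labels rather than any data from the project.

```python
LABELS = ["normal", "warning", "dangerous", "fail"]


def per_class_f1(y_true, y_pred, labels):
    """F1 per class from true/predicted labels: 2*TP / (2*TP + FP + FN)."""
    scores = {}
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(scores):
    """Unweighted mean: every class counts equally."""
    return sum(scores.values()) / len(scores)


def weighted_f1(scores, y_true):
    """Mean weighted by how many true samples each class has."""
    return sum(scores[label] * y_true.count(label) for label in scores) / len(y_true)


# Invented example: ten samples, one normal sample misread as a warning.
y_true = ["normal"] * 6 + ["warning"] * 2 + ["dangerous"] + ["fail"]
y_pred = ["normal"] * 5 + ["warning"] * 3 + ["dangerous"] + ["fail"]

scores = per_class_f1(y_true, y_pred, LABELS)
for label in LABELS:
    print(f"{label}: {scores[label]:.4f}")
print(f"macro: {macro_f1(scores):.4f}")
print(f"weighted: {weighted_f1(scores, y_true):.4f}")
```

Because the two averages can diverge when classes are imbalanced, reporting the per-class scores alongside the overall figure, as the results above do, makes it easier to see how the rarer warning, dangerous, and fail states are handled.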