AI agents emerged to close the gap between the promise of powerful AI technology and the underwhelming experiences many real users still face. Generative AI—led by breakthroughs like ChatGPT—is quickly becoming indispensable in our daily lives and workplaces. But the more specific and domain-relevant the task, the more likely you are to hit a wall: the answer isn’t quite right the first time.

Take service planning, for example. When using generative AI, most users tend to approach it in one of two ways to get the answer they’re looking for. Some dig deeper, asking follow-up questions about timelines, cost factors, or deliverables until the AI produces a response detailed enough to act on. Ironically, this back-and-forth process can feel more time-consuming than handling the task manually. Others take a different approach: they rephrase the same question over and over, hoping the AI will eventually “get it right.” But often, the AI responds differently each time, leading to frustration and eroding trust in its reliability.

Interestingly, this trial-and-error process mirrors how AI agents operate—except agents take it a step further. Built on an agentic system, AI agents automate complex tasks that once required manual human effort. They coordinate Large Language Models (LLMs) to understand intent, retrieve relevant information, and assemble specific, step-by-step solutions.

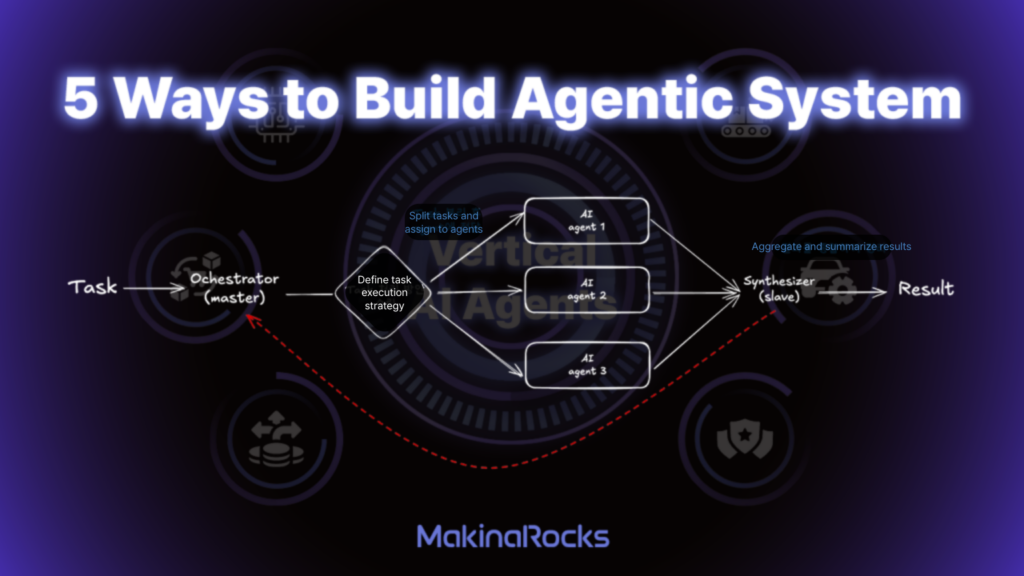

At its core, an agentic system is about enabling multiple AI agents to operate independently—yet collaboratively—toward a unified goal. Think of it like a team of specialists, each handling a distinct part of the problem, yet aligned under a shared objective. Just as no two business problems are solved the same way, there are many ways to design an agentic system. Below, we explore five of the most common approaches to building effective agentic systems—and when to use each.

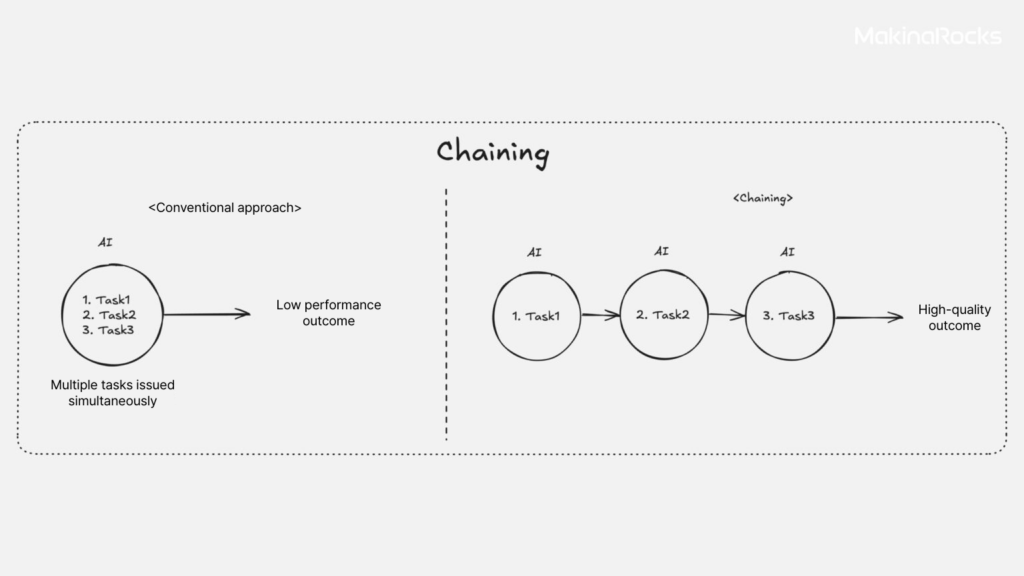

1. Chaining

Chaining structures tasks into sequential steps, where each step builds on the output of the previous one.

Chaining is a core technique in AI agents: a complex task is broken down into smaller, manageable steps that are executed sequentially. It mirrors the iterative way humans often ask follow-up questions to get more precise answers, but in a more structured and efficient form. In a manufacturing context, this approach can evolve into a vertical AI agent system, that is, a manufacturing-specific chaining system. This setup breaks down complex manufacturing workflows into discrete steps, allowing AI agents to handle each phase systematically. Take quality control as an example. In a typical inspection process, tasks must be completed in a precise sequence:

- Define the specifications and quality standards of the item

- Establish inspection methods aligned with those standards

- Detect anomalies based on inspection outcomes

- Analyze root causes and propose corrective actions

By clearly separating each step, organizations can reduce errors, improve traceability, and make better decisions backed by accurate, step-by-step analysis. Outputs from each phase serve as vital inputs for the next, creating a feedback loop that enhances the overall effectiveness of the quality management system. This structured approach not only minimizes mistakes but also delivers more consistent and reliable outcomes.
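As a minimal sketch of the chaining idea, the four quality-control steps can be modeled as functions that each read the previous step's output from a shared context, with root-cause analysis running last because it consumes the detected anomalies. Everything here is an assumption for illustration: the function names, the 10.0 mm target, the 0.05 mm tolerance, and the sample measurements are hypothetical, and in a real system each step would typically be an LLM call rather than a plain function.

```python
from typing import Callable

# Each step receives the accumulated context and returns it with new fields added.
Step = Callable[[dict], dict]


def define_specs(ctx: dict) -> dict:
    # Hypothetical specification: target dimension and tolerance in millimetres.
    return {**ctx, "spec": {"target_mm": 10.0, "tolerance_mm": 0.05}}


def plan_inspection(ctx: dict) -> dict:
    # Derive acceptance limits from the spec produced by the previous step.
    spec = ctx["spec"]
    low = spec["target_mm"] - spec["tolerance_mm"]
    high = spec["target_mm"] + spec["tolerance_mm"]
    return {**ctx, "limits": (low, high)}


def detect_anomalies(ctx: dict) -> dict:
    # Flag every measurement that falls outside the acceptance limits.
    low, high = ctx["limits"]
    bad = [m for m in ctx["measurements"] if not (low <= m <= high)]
    return {**ctx, "anomalies": bad}


def analyze_root_cause(ctx: dict) -> dict:
    # Placeholder rule standing in for a real root-cause analysis.
    action = "recalibrate tooling" if ctx["anomalies"] else "no action needed"
    return {**ctx, "corrective_action": action}


def run_chain(steps: list[Step], ctx: dict) -> dict:
    # Run the steps strictly in order; each one builds on the last output.
    for step in steps:
        ctx = step(ctx)
    return ctx


CHAIN = [define_specs, plan_inspection, detect_anomalies, analyze_root_cause]
result = run_chain(CHAIN, {"measurements": [10.01, 9.98, 10.12]})
print(result["anomalies"], result["corrective_action"])
```

Because every step only reads what earlier steps wrote, the intermediate context doubles as an audit trail, which is where the traceability benefit comes from.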

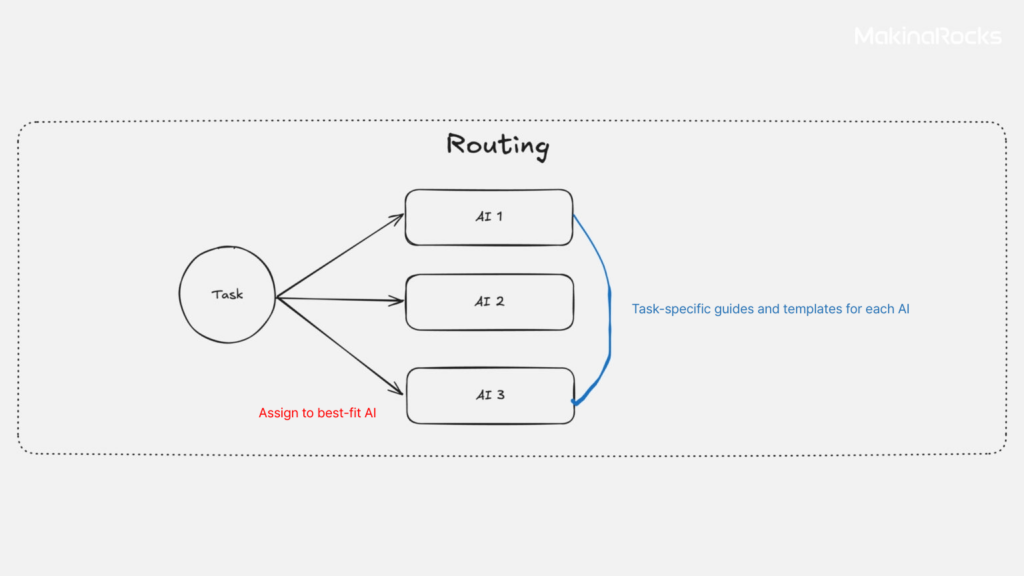

2. Routing

Routing assigns tasks to the appropriate paths and ensures each one uses the optimal resources for execution.

Routing is the process of assigning tasks to the agents best equipped to handle them—much like how organizations delegate work to domain specialists. Unlike a general-purpose LLM that provides broad but shallow responses, specialized AI agents are trained on domain-specific data and equipped with tailored guides and templates. This enables them to deliver deeper insight and more accurate results for the tasks they’re designed to handle.

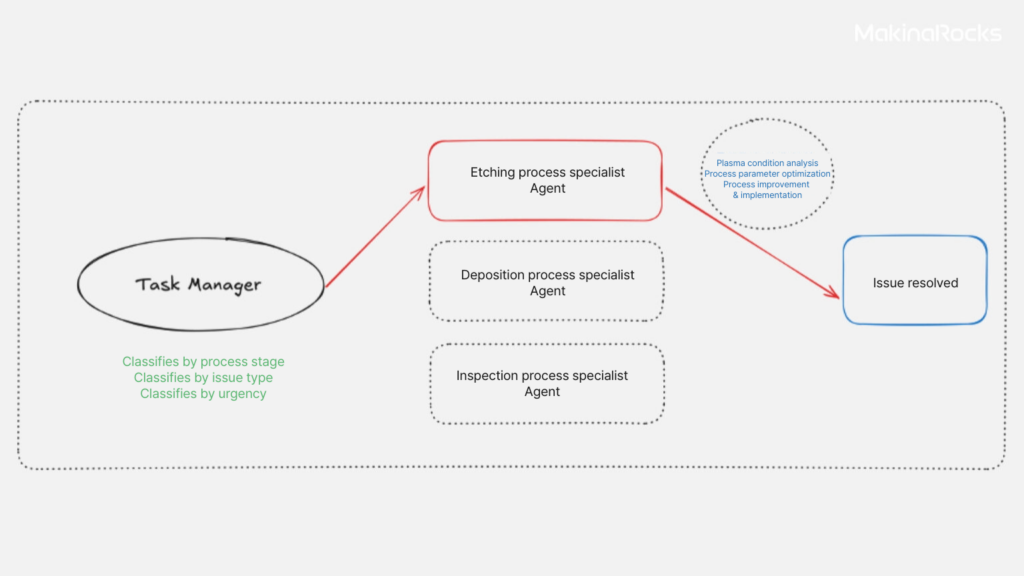

Example of a vertical AI agent routing system configured for a semiconductor production line.

Let’s consider a hypothetical vertical AI agent routing system applied to a semiconductor production line. At the center of the system is a task manager, which coordinates operations. It uses predefined criteria, such as process stage, problem type, and urgency level, to determine which specialized agent is best suited for each task. Let’s say the system includes three specialists: an etching process specialist agent, a deposition process specialist agent, and an inspection process specialist agent. Now, imagine an issue is reported: “excessive depth deviation during the etching process.” The task manager analyzes the problem and classifies it as a quality concern specific to the plasma etching process. Based on this, it routes the task to the etching process specialist agent.

This agent then executes a structured sequence of tasks: 1) analyze plasma conditions, 2) optimize process parameters, and 3) develop and implement corrective measures. In this setup, routing ensures that the task is delegated to the most capable agent, which then resolves the issue independently. Unlike parallelization or orchestration, where multiple agents collaborate to produce a unified outcome, routing is about targeted delegation: assigning a task to a single, best-fit agent without further coordination.
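The routing step in this example can be sketched as a lookup from a classified process stage to a single specialist. This is only an illustration under stated assumptions: the `Issue` class, the agent functions, and the keyword-based `classify` are hypothetical stand-ins, and a real task manager would more likely use an LLM or a trained classifier and would also weigh problem type and urgency.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Issue:
    description: str
    urgency: str = "normal"  # carried along, not used by this simple router


def etching_agent(issue: Issue) -> list[str]:
    # The three-step plan described for the etching specialist.
    return [
        "analyze plasma conditions",
        "optimize process parameters",
        "develop and implement corrective measures",
    ]


def deposition_agent(issue: Issue) -> list[str]:
    return ["review deposition recipe"]  # hypothetical plan


def inspection_agent(issue: Issue) -> list[str]:
    return ["re-run inspection"]  # hypothetical plan


ROUTES: dict[str, Callable[[Issue], list[str]]] = {
    "etching": etching_agent,
    "deposition": deposition_agent,
    "inspection": inspection_agent,
}


def classify(issue: Issue) -> str:
    # Keyword match standing in for the task manager's classification.
    text = issue.description.lower()
    for stage in ROUTES:
        if stage in text:
            return stage
    return "inspection"  # assumed fallback when no stage is mentioned


def route(issue: Issue) -> tuple[str, list[str]]:
    # Delegate to exactly one best-fit agent; no further coordination.
    stage = classify(issue)
    return stage, ROUTES[stage](issue)


stage, plan = route(Issue("excessive depth deviation during the etching process"))
print(stage, plan)
```

Note that `route` returns the output of exactly one agent, which is what separates routing from the collaborative patterns.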

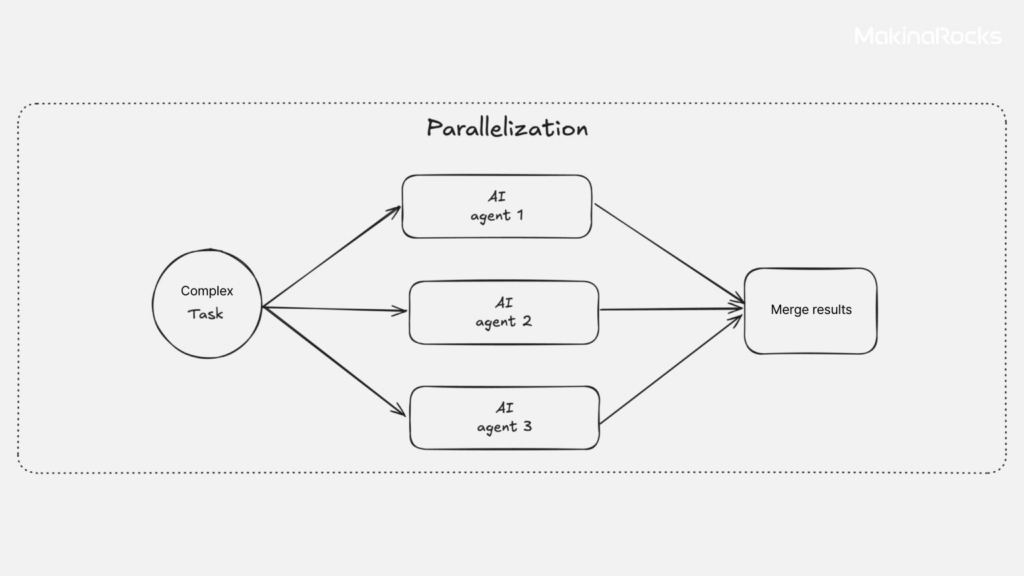

3. Parallelization

Parallelization boosts system efficiency by executing multiple tasks at the same time.

Parallelization is a method in which multiple AI agents work simultaneously to increase the speed, accuracy, and overall efficiency of task execution. In agentic systems, parallelization is typically implemented through two main approaches: ‘sectioning’ and ‘voting.’

Sectioning involves dividing a single large task into smaller, independent subtasks that can be handled concurrently by different AI agents. For example, in a large-scale code review, one agent might focus on identifying security vulnerabilities, another on performance optimization, and a third on code style and readability. Each agent leverages its area of expertise, and by working in parallel, the overall review process becomes faster and more precise.

Voting, on the other hand, assigns the same task to multiple agents independently. Each agent produces its own result, and the final decision is made by aggregating those outcomes. A practical example is a content moderation system: several agents assess whether a piece of content is appropriate, and the results are combined to ensure a balanced and reliable judgment.
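A minimal sketch of the voting approach for content moderation might look like the following. The three moderator functions and their rules are hypothetical placeholders for independent agents (in practice, separate LLM calls or models), and simple majority is just one possible aggregation rule.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Moderator = Callable[[str], str]


def keyword_moderator(text: str) -> str:
    # Hypothetical rule: reject on blocklisted words.
    return "reject" if any(w in text.lower() for w in ("spam", "scam")) else "approve"


def length_moderator(text: str) -> str:
    # Hypothetical rule: reject very long posts.
    return "reject" if len(text) > 500 else "approve"


def link_moderator(text: str) -> str:
    # Hypothetical rule: reject unencrypted links.
    return "reject" if "http://" in text else "approve"


MODERATORS: list[Moderator] = [keyword_moderator, length_moderator, link_moderator]


def vote(text: str, agents: list[Moderator]) -> tuple[str, list[str]]:
    # Every agent judges the same input independently and in parallel.
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        verdicts = list(pool.map(lambda agent: agent(text), agents))
    # Aggregate by simple majority.
    winner, _ = Counter(verdicts).most_common(1)[0]
    return winner, verdicts


decision, verdicts = vote("Totally legit, not a scam: http://example.test", MODERATORS)
print(decision, verdicts)
```

Using an odd number of agents with a binary verdict avoids ties; other schemes, such as requiring unanimity to approve, trade recall for precision.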

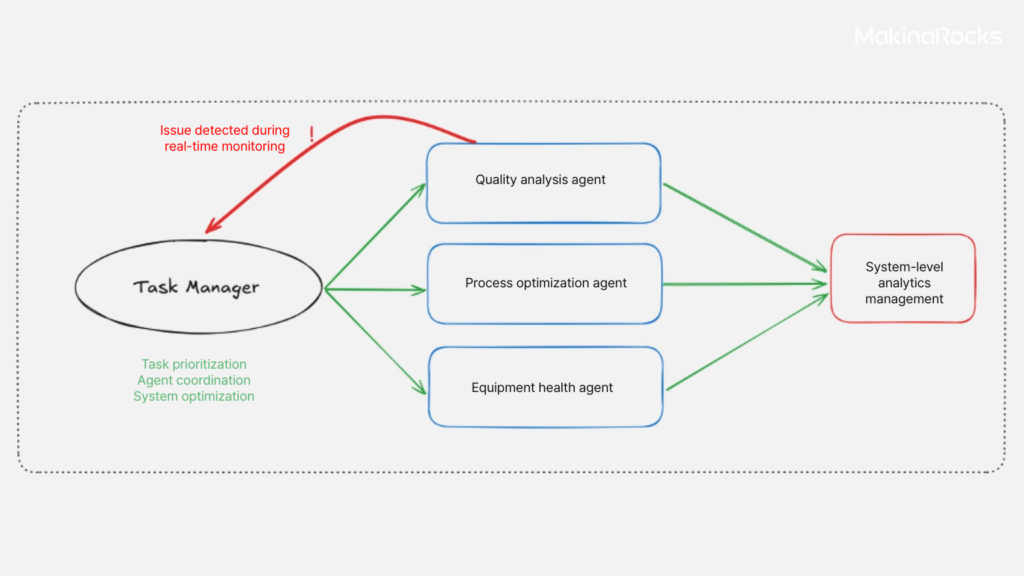

Example of a vertical AI agent parallelization system applied to an automotive transmission production line.

Now, let’s consider a vertical AI agent parallelization system in the context of an automotive transmission production line, using the sectioning approach. Suppose a quality analysis agent detects an abnormal increase in gear dimensional deviation during real-time monitoring. The issue is escalated to the task manager, which evaluates task urgency and immediately assigns the relevant specialized agents to respond, each handling a different aspect of the issue in parallel. For instance, a quality analysis agent analyzes real-time data to identify the source of the deviation. At the same time, a process optimization agent reviews and adjusts process parameters, while an equipment health agent performs diagnostics to assess the condition of the machine involved. Each agent contributes its specialized insight, and their findings are collectively analyzed to enable faster and more accurate corrective action.
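The sectioning flow in this scenario can be sketched as a task manager that fans one event out to three specialists and then gathers their findings. The agent functions, the event fields, and the machine name `hobbing-03` are all hypothetical; real agents would query monitoring data or call an LLM instead of returning fixed strings.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def quality_analysis(event: dict) -> str:
    # Examines the measured deviation against its limit.
    return f"deviation {event['deviation_mm']} mm exceeds limit {event['limit_mm']} mm"


def process_optimization(event: dict) -> str:
    # Placeholder recommendation for the process parameters.
    return "review feed rate and cutting parameters"


def equipment_health(event: dict) -> str:
    # Targets diagnostics at the machine that produced the part.
    return f"run diagnostics on {event['machine_id']}"


SECTIONS: dict[str, Callable[[dict], str]] = {
    "quality_analysis": quality_analysis,
    "process_optimization": process_optimization,
    "equipment_health": equipment_health,
}


def run_sections(event: dict) -> dict[str, str]:
    # Each agent handles a different aspect of the same issue concurrently.
    with ThreadPoolExecutor(max_workers=len(SECTIONS)) as pool:
        futures = {name: pool.submit(agent, event) for name, agent in SECTIONS.items()}
        # Collect all findings so they can be analyzed together.
        return {name: future.result() for name, future in futures.items()}


event = {"machine_id": "hobbing-03", "deviation_mm": 0.08, "limit_mm": 0.05}
findings = run_sections(event)
for name, finding in findings.items():
    print(f"{name}: {finding}")
```

The set of sections is fixed before execution starts, which is the property that the orchestrator-workers pattern relaxes.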

A parallelized agentic system like this delivers both quantitative and qualitative benefits. Quantitatively, it helps reduce quality defect rates, improve preventive maintenance execution, and increase overall productivity. On the qualitative side, it supports the creation of an integrated and responsive quality management system, enhances equipment reliability, and strengthens data-driven decision-making across operations. By building a vertical AI agent parallelization system anchored by the task manager, organizations can configure a central control system, design specialized agents and collaboration frameworks, and implement a scalable architecture.

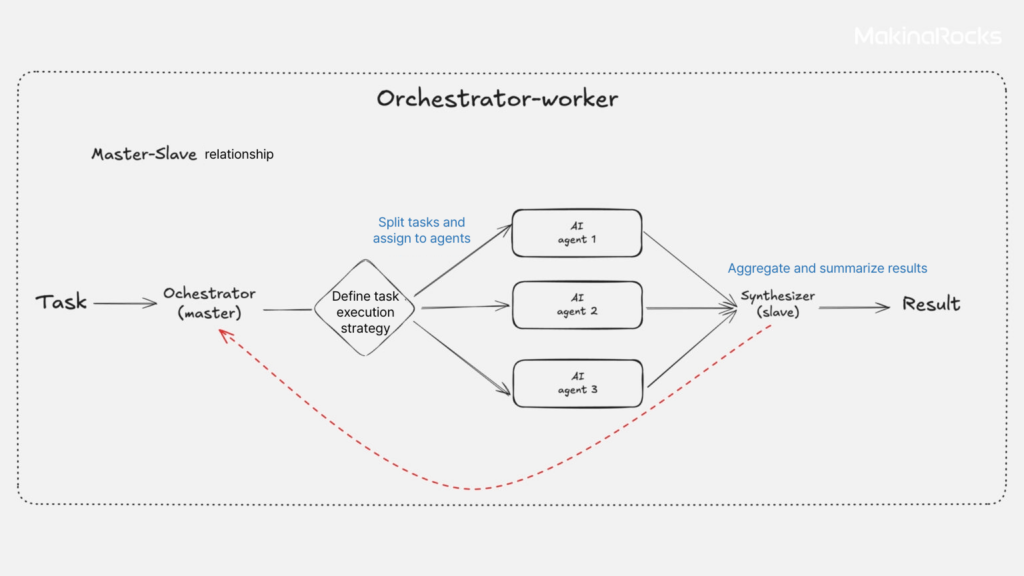

4. Orchestrator-workers

A centralized orchestrator manages and dynamically assigns tasks to specialized workers for efficient execution.

The orchestrator-worker architecture enables intelligent task distribution by placing a centralized AI—the orchestrator—at the core of decision-making. This orchestrator analyzes high-level goals, breaks them down into manageable subtasks, and dynamically assigns these subtasks to specialized worker AIs. Each worker AI then independently executes its assigned task and reports the results back to the orchestrator for integration, validation, and further coordination. At first glance, this structure may appear similar to parallelization. However, the key distinction lies in its flexibility and adaptability. While parallel systems typically follow a fixed distribution of tasks, orchestrator-worker systems allow real-time adjustments based on task complexity, dependencies, and execution progress.

Let’s look at a practical example: imagine applying an orchestrator-worker system to smart factory operations. In this setting, the orchestrator takes charge of the entire production process. It analyzes the tasks required at each stage of the line, determines priorities, and identifies dependencies between steps. Based on this analysis, it develops a task distribution plan and assigns work across the system. For example, one worker AI may focus on product assembly, another on quality inspections, and a third on packaging. Each worker operates autonomously, but all remain connected through the orchestrator’s real-time oversight.

If an issue arises—such as a delay caused by a failed quality check—the orchestrator can immediately adjust. It might reprioritize that task, allocate additional workers to resolve the bottleneck, or reorganize the workflow entirely. This ability to dynamically reassign and adapt makes the orchestrator-worker model especially effective in complex and fast-changing environments.

By continuously monitoring progress and reevaluating task relationships, the orchestrator ensures operations remain coordinated, agile, and efficient. Unlike static parallelization, this architecture supports truly adaptive decision-making, allowing the AI system to evolve in real time.
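To make the contrast with a fixed task split concrete, here is a small sketch in which the orchestrator re-plans when a worker reports a failure. The worker functions, the simulated inspection rule, and the "rework, then retry" policy are assumptions chosen for illustration; a production orchestrator would usually let an LLM or a scheduler make the re-planning decision.

```python
from collections import deque
from typing import Callable

Worker = Callable[[dict], bool]


def assembly(state: dict) -> bool:
    state["assembled"] = True
    return True


def rework(state: dict) -> bool:
    state["reworked"] = True
    return True


def inspection(state: dict) -> bool:
    # Simulated rule: the quality check fails until the unit has been reworked.
    return state.get("reworked", False)


def packaging(state: dict) -> bool:
    state["packaged"] = True
    return True


WORKERS: dict[str, Worker] = {
    "assembly": assembly,
    "rework": rework,
    "inspection": inspection,
    "packaging": packaging,
}


def orchestrate(workers: dict[str, Worker], max_steps: int = 10) -> list[tuple[str, bool]]:
    state: dict = {}
    queue = deque(["assembly", "inspection", "packaging"])  # initial plan
    log: list[tuple[str, bool]] = []
    while queue and len(log) < max_steps:
        task = queue.popleft()
        ok = workers[task](state)
        log.append((task, ok))
        if not ok:
            # Dynamic re-planning: insert rework, then retry the failed task,
            # ahead of everything else that was already scheduled.
            queue.appendleft(task)
            queue.appendleft("rework")
    return log


log = orchestrate(WORKERS)
print(log)
```

The `max_steps` cap is a guard against endless retry loops, a failure mode worth planning for whenever the plan can change at run time.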

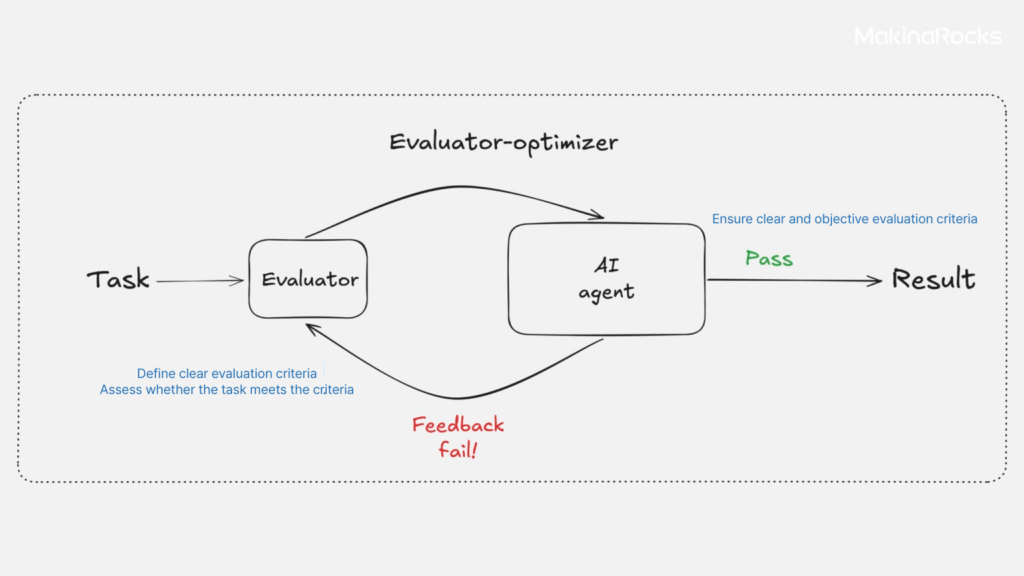

5. Evaluator-optimizer

Evaluator-optimizer enables iterative improvement by evaluating system performance and optimizing based on feedback.

The evaluator-optimizer structure is an iterative framework in which the evaluator AI assesses results, and the optimizer AI makes adjustments based on that feedback. This architecture is particularly well-suited for processes with many variables or those that require constant fine-tuning.

Take quality control, for example. The evaluator AI inspects product output to assess defect rates, while the optimizer AI adjusts conditions like temperature, pressure, or speed to improve outcomes. This feedback loop continues until optimal performance is achieved—and can be maintained continuously. The same approach can be used to improve equipment efficiency: the evaluator monitors factors like utilization, failure frequency, or energy consumption, while the optimizer refines operating parameters, inspection intervals, and maintenance schedules. The key strength of the evaluator-optimizer system lies in its ability to drive continuous improvement. However, successful implementation requires clearly defined evaluation criteria, enough iteration time, and an evaluator that is both objective and domain-aware.
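The feedback loop can be sketched with a toy evaluator and a simple step-based optimizer. The defect-rate formula below is invented purely for illustration (it assumes a minimum at 180 °C), as are the starting temperature, step size, and target; a real evaluator would measure actual product output and the optimizer could be an LLM or a dedicated optimization routine.

```python
def evaluate(temperature_c: float) -> float:
    # Hypothetical defect-rate model with its minimum at 180 degrees Celsius.
    return 0.02 + 0.0004 * (temperature_c - 180.0) ** 2


def optimize(
    temperature_c: float,
    step: float = 4.0,
    target: float = 0.025,
    max_iters: int = 50,
) -> tuple[float, float, list[tuple[float, float]]]:
    best = evaluate(temperature_c)
    history = [(temperature_c, best)]
    for _ in range(max_iters):
        if best <= target:
            break  # evaluation criterion met
        # Optimizer proposes neighbouring settings; evaluator scores them.
        candidate = min((temperature_c - step, temperature_c + step), key=evaluate)
        score = evaluate(candidate)
        if score < best:
            temperature_c, best = candidate, score
            history.append((temperature_c, best))
        else:
            step /= 2  # no improvement: search more finely
    return temperature_c, best, history


temperature, defect_rate, history = optimize(170.0)
for temp, rate in history:
    print(f"{temp:.1f} C -> defect rate {rate:.4f}")
```

The explicit `target` and `max_iters` arguments correspond to the two implementation requirements named above: clearly defined evaluation criteria and a bounded iteration budget.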

More broadly, this pattern reflects what makes agentic AI systems powerful: the ability to autonomously handle complex, dynamic tasks. And building such autonomy is often easier than it seems. For instance, a large language model (LLM) can choose tools and refine actions based on feedback—repeating this loop until a goal is reached. This makes agentic systems especially valuable for tasks with unpredictable steps, multi-stage decision-making, or scenarios where scalable automation is needed.
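A bare-bones version of that loop is sketched below. The `choose_tool` function is a hard-coded stand-in for the LLM's decision, and the tools, the 10.05 threshold, and the sensor value are hypothetical; the point is only the shape of the loop: pick a tool, observe the result, and repeat until the goal is met or a step limit is hit.

```python
from typing import Callable, Optional


def read_sensor(state: dict) -> str:
    state["reading"] = 10.12  # hypothetical measurement
    return f"reading={state['reading']}"


def adjust_offset(state: dict) -> str:
    state["reading"] = round(state["reading"] - 0.1, 2)
    return f"reading={state['reading']}"


TOOLS: dict[str, Callable[[dict], str]] = {
    "read_sensor": read_sensor,
    "adjust_offset": adjust_offset,
}


def choose_tool(state: dict) -> Optional[str]:
    # Stand-in for the LLM: decide the next action from the feedback so far.
    if "reading" not in state:
        return "read_sensor"
    if state["reading"] > 10.05:
        return "adjust_offset"
    return None  # goal reached, stop


def run_agent(max_steps: int = 5) -> tuple[dict, list[tuple[str, str]]]:
    state: dict = {}
    trace: list[tuple[str, str]] = []
    for _ in range(max_steps):
        tool = choose_tool(state)
        if tool is None:
            break
        observation = TOOLS[tool](state)  # act, then record the feedback
        trace.append((tool, observation))
    return state, trace


state, trace = run_agent()
print(trace)
```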

So, which agentic system should you adopt? The answer depends on the problem you're trying to solve. If you're working with vertical AI agents to address specific business challenges, the best architecture is one that fits both your data and your domain. That often means collaborating with an AI expert to design a tailored solution around your unique workflow.

In the next post, we’ll dive into a real-world case study of how MakinaRocks deployed a vertical agentic system. Until then, if you’re considering how to apply domain-specific AI in your operations, feel free to reach out through the banner below—we’d be happy to help.

Note: This post was translated from the original Korean version by Kyoungyeon Kim.